Exploratory Data Analysis of MasteryConnect Student Test Data

Problem Statement

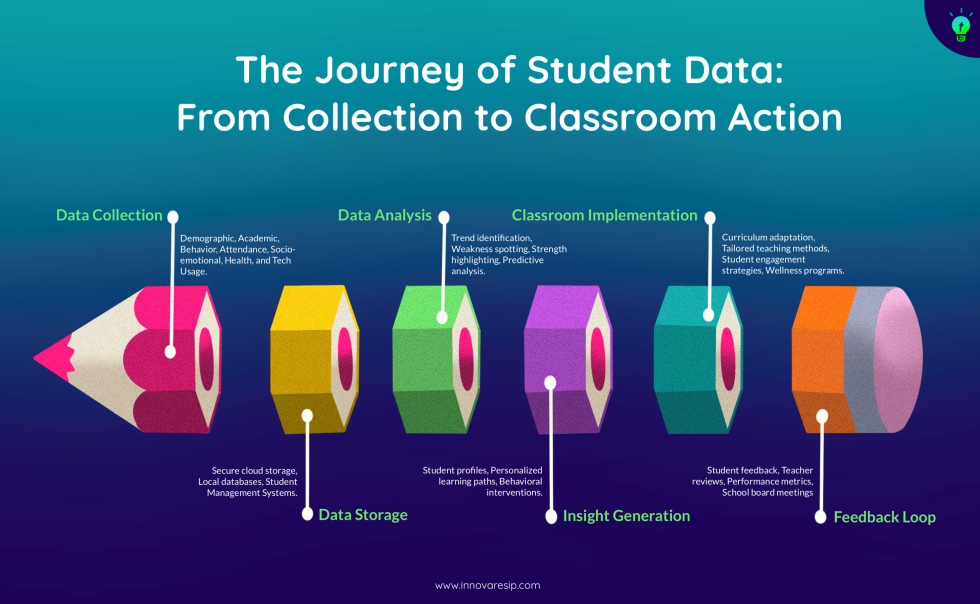

Teachers make, on average, around 1,500 decisions per day. This may seem like a dramatic statistic to people outside the profession, but it is an enduring reality for teachers who give their all in pursuit of positive academic outcomes. Tracking and monitoring student performance is crucial to achieving those outcomes; however, doing so manually throughout the year is challenging and difficult to sustain. This project aims to alleviate this burden by:

- Collecting CSV assessment data from MasteryConnect
- Processing and uploading datasets to MongoDB
- Generating targeted insights using MongoDB’s aggregation pipelines and pandas (a Python data manipulation library)
- Presenting these insights through intuitive visualizations

The key performance indicators this system generates, such as standard mastery rates, question-type proficiency, and individual student growth trajectories, can help teachers implement data-driven classroom strategies, help students understand their progress, and allow administrators to identify broader trends.

The system is designed with scalability in mind and could be extended to multiple classrooms or entire school districts, with the aim of reducing decision fatigue for educators.

| Analysis Topic | Question | Approach |
|---|---|---|
| Overall Performance Analysis | What is the overall distribution of student performance across all tests? | Analyze descriptive statistics of overall scores and visualize the distribution. |
| Standard-Based Performance Analysis | How do students perform across different biology standards? | Calculate and visualize average performance for each standard. |
| Question Type Analysis | How does performance vary across different question types? | Calculate and visualize difficulty rankings for different question types. |
| Performance Analysis Over Time | How has student performance changed over time? | Analyze and visualize performance trends over time for all students. |
| Test Participation Analysis | Are there patterns in test participation or missed tests? | Identify and analyze data for students who did not complete certain tests. |
| Depth of Knowledge (DOK) Analysis | How does performance correlate with the depth of knowledge required? | Analyze performance across different DOK levels. |
| Class and Teacher Impact Analysis | Are there significant performance differences across classes or teachers? | Compare performance metrics across different classes and teachers. |
| Standard Coverage Analysis | Which standards are most frequently tested, and how does this relate to performance? | Analyze the frequency of questions for each standard and correlate with performance data. |
| Individual Student Progress Tracking | How can we visualize and analyze individual student progress over time? | Develop interactive visualizations for tracking individual student performance across tests and standards. |
| Test Design Analysis | Are there patterns in test composition that correlate with overall performance? | Analyze the relationship between test characteristics (e.g., question types, standards covered) and overall performance. |

Imports

```python
import os
import sys

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
from dotenv import load_dotenv
from IPython.display import display

# Make the project root importable so the local `src` package can be found
sys.path.append(os.path.abspath(os.path.join(os.getcwd(), '..')))

from src.components.db_connection import get_database, build_query
from src.pipelines import mongo_db_pipelines as m_db
from src.components.data_ingestion import drop_collection
```

Initialize database

```python
db = get_database(create_indices=True)
students = db['students']
tests = db['tests']
```

[ 2024-08-03 08:48:18,173 ] 29 my_logger - INFO - Successfully connected to MongoDB

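Filter data for particular teacher(s) or time period

```python
# Read the teacher name from the .env file rather than hard-coding it
load_dotenv()
teacher = os.getenv('TEACHER_2')

# Empty strings are passed for the filters that are not used in this run
class_name = ''
start_date = ''

query = build_query(teacher=teacher, class_name=class_name, start_date=start_date)
```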
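The body of `build_query` is not shown in this notebook. As a minimal sketch, assuming the `students` documents carry top-level `teacher`, `class_name`, and `date` fields (field names are assumptions), a query builder that only adds a condition for each non-empty argument could look like this. `build_query_sketch` is a hypothetical name, not the project's function.

```python
def build_query_sketch(teacher="", class_name="", start_date=""):
    """Hypothetical stand-in for build_query: skip any filter passed as an empty string.

    The field names teacher, class_name and date are assumptions about the schema.
    """
    query = {}
    if teacher:
        query["teacher"] = teacher
    if class_name:
        query["class_name"] = class_name
    if start_date:
        # ISO-format date strings (YYYY-MM-DD) compare correctly as strings;
        # a real schema might store datetime objects instead.
        query["date"] = {"$gte": start_date}
    return query
```

With every argument empty, the sketch returns `{}`, which MongoDB treats as "match all documents".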

Statistical analysis

Numeric data across all classes

```python
from src.utils import describe_numeric_field_pandas

# Descriptive statistics of overall test scores (percent)
overall_scores_stats = describe_numeric_field_pandas(students, 'overall_percentage', query)
display(pd.DataFrame(overall_scores_stats))
```

| | 0 |
|---|---|
| count | 7729.000000 |
| mean | 63.818735 |
| std | 22.409500 |
| min | 0.000000 |
| 25% | 47.000000 |
| 50% | 67.000000 |
| 75% | 80.000000 |
| max | 100.000000 |
| skewness | -0.287499 |
| kurtosis | -0.727309 |
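`describe_numeric_field_pandas` is project code whose body is not shown. A minimal pandas sketch of the same kind of summary, assuming the scores arrive as a list or Series, extends `Series.describe()` with skewness and kurtosis. `describe_with_shape` is a hypothetical name.

```python
import pandas as pd


def describe_with_shape(values):
    """Summary statistics plus skewness and excess kurtosis for numeric values.

    A sketch only; the project's helper may compute these differently.
    """
    s = pd.Series(values, dtype="float64").dropna()  # missed tests (NaN) are ignored
    stats = s.describe()                             # count, mean, std, min, quartiles, max
    stats["skewness"] = s.skew()                     # bias-corrected sample skewness
    stats["kurtosis"] = s.kurt()                     # excess kurtosis: a normal distribution gives 0
    return stats
```

If the helper also reports excess kurtosis (as `Series.kurt()` does), the table above describes a slightly left-skewed distribution (skewness -0.29, median 67 above the mean of 63.8) that is flatter than a normal distribution (kurtosis -0.73).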

Frequency distribution across all classes

This shows how often each standard, question type, and depth-of-knowledge (DOK) level appears among the questions for the selected teacher.

```python
from src.utils import get_frequency_distribution

# Value counts of question fields, computed with a MongoDB aggregation pipeline
standard_dist = get_frequency_distribution(db=db, field='questions', subfield='standard', teacher=teacher)
item_type_dist = get_frequency_distribution(db=db, field='questions', subfield='item_type_name', teacher=teacher)
dok_dist = get_frequency_distribution(db=db, field='questions', subfield='dok', teacher=teacher)

# Rename MongoDB's `_id` grouping key to a descriptive column name
standard_dist = pd.DataFrame(standard_dist).rename(columns={'_id': 'standards'})
item_type_dist = pd.DataFrame(item_type_dist).rename(columns={'_id': 'item_type'})
dok_dist = pd.DataFrame(dok_dist).rename(columns={'_id': 'dok'})

print("Frequency of Course Standards")
display(standard_dist.T)  # transposed so the long list of standards reads across
print("Distribution of Question types")
display(item_type_dist)
print("Depth of knowledge Frequency")
display(dok_dist)
```

Frequency of Course Standards

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 94 | 95 | 96 | 97 | 98 | 99 | 100 | 101 | 102 | 103 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| standards | Bio.4.1.1 | Bio.3.2.2 | Bio.1.1.1 | Bio.4.2.1 | Bio.1.1.2 | Chm.1.1.1 | Bio.4.1.3 | Bio.1.2.1 | Bio.1.2.2 | Bio.3.2.1 | ... | EEn.2.3.2 | PSc.3.3.5 | EEn.2.2.2 | PSc.3.3.1 | EEn.2.4.2 | Chm.1.2.2 | PSc.2.3.2 | EEn.2.1.2 | Chm.1.2.3 | Chm.3.2.2 |
| count | 384 | 363 | 308 | 304 | 295 | 281 | 275 | 239 | 205 | 202 | ... | 6 | 6 | 4 | 4 | 4 | 4 | 2 | 2 | 1 | 1 |

2 rows × 104 columns

Distribution of Question types

| | item_type | count |
|---|---|---|
| 0 | Multiple Choice | 3494 |
| 1 | Multiple Choice Question | 2061 |
| 2 | Multiple Choice (Single Response) | 318 |
| 3 | Match List | 122 |
| 4 | Label Image with Drag and Drop | 86 |
| 5 | Choice Matrix | 20 |
| 6 | Cloze Association | 16 |
| 7 | Multi Select | 15 |
| 8 | Order List | 15 |
| 9 | Highlight | 12 |
| 10 | Sort List | 3 |
| 11 | Cloze Dropdown | 2 |
| 12 | Classification | 2 |

Depth of knowledge Frequency

| | dok | count |
|---|---|---|
| 0 | NaN | 4978 |
| 1 | 2 | 626 |
| 2 | 3 | 303 |
| 3 | 1 | 251 |
| 4 | 4 | 8 |
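`get_frequency_distribution` is described only as a MongoDB pipeline. A common way to count values of a subfield inside an array of embedded documents is `$unwind` followed by `$group` and `$sort`. The sketch below builds such a pipeline as plain Python data, assuming each test document stores its questions in an array field such as `questions`; `frequency_pipeline` is a hypothetical name, and the project's helper may be written differently.

```python
def frequency_pipeline(field, subfield, match=None):
    """Build an aggregation pipeline counting the values of field.subfield.

    Hypothetical sketch: assumes `field` is an array of embedded documents,
    e.g. the questions of a test, each of which may carry `subfield`.
    """
    pipeline = []
    if match:
        pipeline.append({"$match": match})  # optional filter, e.g. by teacher
    pipeline += [
        {"$unwind": f"${field}"},  # one document per array element (question)
        {"$group": {"_id": f"${field}.{subfield}", "count": {"$sum": 1}}},
        {"$sort": {"count": -1}},  # most frequent values first
    ]
    return pipeline
```

It would be run with something like `list(db['tests'].aggregate(frequency_pipeline('questions', 'dok')))`. Questions with no usable value for the subfield (missing, null, or NaN) still form a group of their own, which is why the DOK table has a large NaN row: most questions appear to have no DOK level recorded.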

Distribution Visualizations

```python
from src.utils import create_frequency_distributions_plot

fig_distributions = create_frequency_distributions_plot(standard_dist, item_type_dist, dok_dist)
display(fig_distributions)
```

Performance over time for all students

Test results are aggregated by date; students who missed a test (NaN scores) are excluded.

```python
from src.utils import performance_trend

# Per-date count, average, minimum and maximum of overall scores
performance_trend_df = pd.DataFrame(performance_trend(students, query))

print('First 5 tests by date and average')
display(performance_trend_df.head(5))
print('Last 5 tests by date and average')
display(performance_trend_df.tail(5))
```

First 5 tests by date and average

| | date | count | avg_performance | min_value | max_value |
|---|---|---|---|---|---|
| 0 | 2016-09-29 | 80 | 62.575000 | 25.0 | 94.0 |
| 1 | 2016-10-04 | 74 | 33.918919 | 5.0 | 65.0 |
| 2 | 2016-10-18 | 80 | 69.200000 | 17.0 | 92.0 |
| 3 | 2016-10-27 | 84 | 73.642857 | 33.0 | 100.0 |
| 4 | 2016-11-14 | 72 | 37.861111 | 7.0 | 78.0 |

Last 5 tests by date and average

| | date | count | avg_performance | min_value | max_value |
|---|---|---|---|---|---|
| 142 | 2024-05-14 | 16 | 61.625000 | 27.0 | 72.0 |
| 143 | 2024-05-15 | 12 | 81.166667 | 73.0 | 90.0 |
| 144 | 2024-05-17 | 16 | 73.375000 | 48.0 | 88.0 |
| 145 | 2024-05-23 | 25 | 55.720000 | 22.0 | 85.0 |
| 146 | 2024-05-24 | 14 | 62.357143 | 35.0 | 78.0 |
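`performance_trend` runs against MongoDB. To cross-check its output on a DataFrame export, a pandas sketch of the same per-date summary might look like this. The column names `date` and `overall_percentage` are assumptions based on the fields used above, and `performance_trend_pandas` is a hypothetical name.

```python
import pandas as pd


def performance_trend_pandas(df, score_col="overall_percentage", date_col="date"):
    """Per-date count, mean, min and max of scores, ignoring missed tests (NaN)."""
    scored = df.dropna(subset=[score_col])  # drop students who missed the test
    trend = (
        scored.groupby(date_col)[score_col]  # groupby sorts dates in ascending order
        .agg(count="count", avg_performance="mean", min_value="min", max_value="max")
        .reset_index()
    )
    return trend
```

The `count` column matters when reading the trend: early dates have 70 to 85 scored students while the last dates have 12 to 25, so averages for recent dates rest on far fewer scores.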

Visualization of the performance trend over time

```python
from src.utils import create_performance_trend_plot

fig_performance = create_performance_trend_plot(performance_trend_df)
display(fig_performance)
```

Students who did not complete a test

```python
from src.utils import identify_students_with_nan, check_responses_for_nan

# Find students whose overall_percentage is missing (NaN) for a test
missed_test = identify_students_with_nan(students, 'overall_percentage')
missed_test_df = pd.DataFrame(missed_test)
```

Anonymizing Data

```python
import random

from faker import Faker

fake = Faker()


def fake_test_id():
    """Generate a random fake test ID of the form <class>_<test type>_<YYYYMMDD>."""
    class_names = ['Biology', 'Chemistry', 'Anatomy']
    test_names = ['Midterm', 'Final', 'Quiz', 'Project', 'Exam']
    class_name = random.choice(class_names)
    test_name = random.choice(test_names)
    # Random date within the past year; it is unrelated to the real test date
    fake_date = fake.date_between(start_date='-1y', end_date='today').strftime('%Y%m%d')
    return f"{class_name}_{test_name}_{fake_date}"


def create_mapping(original_values, fake_function):
    """Map each distinct original value to a freshly generated fake value."""
    return {value: fake_function() for value in set(original_values)}


# Create a mapping for each sensitive column
student_id_mapping = create_mapping(missed_test_df['student_id'], lambda: f"S-{fake.numerify('######')}")
first_name_mapping = create_mapping(missed_test_df['first_name'], fake.first_name)
last_name_mapping = create_mapping(missed_test_df['last_name'], fake.last_name)
teacher_mapping = create_mapping(missed_test_df['teacher'], fake.name)
test_id_mapping = create_mapping(missed_test_df['test_id'], fake_test_id)

# Apply the mappings to a copy so the original DataFrame is left untouched
anonymized_df = missed_test_df.copy()
anonymized_df['student_id'] = anonymized_df['student_id'].map(student_id_mapping)
anonymized_df['first_name'] = anonymized_df['first_name'].map(first_name_mapping)
anonymized_df['last_name'] = anonymized_df['last_name'].map(last_name_mapping)
anonymized_df['teacher'] = anonymized_df['teacher'].map(teacher_mapping)
anonymized_df['test_id'] = anonymized_df['test_id'].map(test_id_mapping)

display(anonymized_df)
```

| | student_id | first_name | last_name | teacher | test_id | date |
|---|---|---|---|---|---|---|
| 0 | S-234271 | Megan | Barber | Mrs. Jennifer Atkinson | Anatomy_Final_20231219 | 2023-12-04 |
| 1 | S-234271 | Megan | Barber | Mrs. Jennifer Atkinson | Biology_Midterm_20231023 | 2023-12-18 |
| 2 | S-234271 | Megan | Barber | Mrs. Jennifer Atkinson | Biology_Quiz_20240106 | 2023-10-30 |
| 3 | S-234271 | Megan | Barber | Mrs. Jennifer Atkinson | Chemistry_Quiz_20231113 | 2023-10-20 |
| 4 | S-234271 | Megan | Barber | Mrs. Jennifer Atkinson | Anatomy_Exam_20230817 | 2023-09-29 |
| ... | ... | ... | ... | ... | ... | ... |
| 11175 | S-981056 | Kayla | Lawson | Juan Brooks | Biology_Exam_20230923 | 2016-10-04 |
| 11176 | S-981056 | Kayla | Lawson | Juan Brooks | Anatomy_Project_20231219 | 2016-10-18 |
| 11177 | S-981056 | Kayla | Lawson | Juan Brooks | Chemistry_Quiz_20231113 | 2016-09-29 |
| 11178 | S-981056 | Kayla | Lawson | Juan Brooks | Biology_Project_20240512 | 2016-11-14 |
| 11179 | S-981056 | Kayla | Lawson | Juan Brooks | Chemistry_Project_20231231 | 2016-10-27 |

11180 rows × 6 columns
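`create_mapping` calls a Faker function once per distinct value, so two different students can, by chance, receive the same fake `S-######` ID, and the mapping changes on every run because no seed is set. Likewise, `fake_test_id` draws the class, test type, and date at random, so fake test IDs no longer agree with the `date` column (for example, `Anatomy_Final_20231219` carries the date 2023-12-04 in the table above). A minimal alternative for IDs, swapping Faker for the standard library's `random.Random.sample`, draws pseudonyms without replacement from a seeded generator. `unique_pseudonyms` and its parameters are hypothetical.

```python
import random


def unique_pseudonyms(values, prefix="S-", digits=6, seed=42):
    """Map each distinct value to a unique, reproducible pseudonym.

    Sampling without replacement guarantees no two originals share a
    pseudonym; the fixed seed makes the mapping repeatable between runs.
    """
    distinct = sorted(set(values), key=str)  # stable order gives a stable mapping
    rng = random.Random(seed)                # private generator; global random state is untouched
    numbers = rng.sample(range(10 ** digits), len(distinct))
    return {v: f"{prefix}{n:0{digits}d}" for v, n in zip(distinct, numbers)}
```

It would replace the Faker-based student ID mapping, e.g. `student_id_mapping = unique_pseudonyms(missed_test_df['student_id'])`. The seed should be kept private, since anyone who knows it and the original IDs can rebuild the mapping.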

Test Overview Interactive table

Which tests did students miss?

```python
from src.utils import display_interactive_student_table

# display_interactive_student_table(anonymized_df, width='80%', height='400px')
```

Additional test stats

What tests did each student miss?
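One way to answer this question, sketched under the assumption of a DataFrame like `anonymized_df` with one row per missed test and the columns `student_id`, `first_name`, `last_name`, and `test_id`, is to group by student and collect the test IDs. `missed_tests_by_student` is a hypothetical helper, not part of `src.utils`.

```python
import pandas as pd


def missed_tests_by_student(missed):
    """Summarize missed tests per student from a frame with one row per missed test."""
    return (
        missed.groupby(["student_id", "first_name", "last_name"])["test_id"]
        .agg(
            missed_count="count",
            missed_tests=lambda ids: ", ".join(sorted(set(ids))),  # readable list of test IDs
        )
        .reset_index()
        .sort_values("missed_count", ascending=False)  # students who missed the most tests first
        .reset_index(drop=True)
    )
```

Sorting by `missed_count` puts the students with the most missed tests at the top, which is usually where follow-up is needed first.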

Overall Performance by Standard:

Question: How has each student performed per standard?

```python
# Run the comprehensive test-analysis aggregation pipeline on the filtered students
pipeline = m_db.comprehensive_test_analysis_pipeline(query)
results = list(students.aggregate(pipeline))

# One row per student response to a question
df = pd.DataFrame(results)
```

Anonymizing the DataFrame to protect sensitive data

```python
import hashlib


def anonymize_df(df):
    """Return a copy of df with student, class, and test identifiers replaced by fake values."""
    anon_df = df.copy()

    # Mappings for names and IDs (`fake` is the Faker instance created earlier)
    student_id_map = {sid: hashlib.md5(str(sid).encode()).hexdigest()[:8] for sid in df['student_id'].unique()}
    first_name_map = {name: fake.first_name() for name in df['first_name'].unique()}
    last_name_map = {name: fake.last_name() for name in df['last_name'].unique()}
    class_name_map = {name: f"Fake-class{fake.numerify('####')}" for name in df['class_name'].unique()}

    # Map each test_id once so every row of the same test gets the same fake ID
    test_id_map = {}
    for test_id in df['test_id'].unique():
        parts = test_id.split('_')
        if len(parts) >= 3:
            # Keep the <class>_<name>_<date> shape; an unknown class prefix is kept as-is
            new_test_id = f"{class_name_map.get(parts[0], parts[0])}_{fake.word().capitalize()}_{fake.date_this_year().strftime('%Y%m%d')}"
        else:
            new_test_id = f"Test_{fake.word().capitalize()}_{fake.date_this_year().strftime('%Y%m%d')}"
        test_id_map[test_id] = new_test_id

    # Apply mappings
    anon_df['student_id'] = anon_df['student_id'].map(student_id_map)
    anon_df['first_name'] = anon_df['first_name'].map(first_name_map)
    anon_df['last_name'] = anon_df['last_name'].map(last_name_map)
    anon_df['class_name'] = anon_df['class_name'].map(class_name_map)
    anon_df['test_id'] = anon_df['test_id'].map(test_id_map)

    # Rebuild test_name from the middle segment(s) of the new test_id
    anon_df['test_name'] = anon_df['test_id'].apply(lambda x: ' '.join(x.split('_')[1:-1]))

    return anon_df


anonymized_df2 = anonymize_df(df)
display(anonymized_df2.head(5))
```

| | _id | student_id | first_name | last_name | test_id | test_name | class_name | question_id | question_type | student_response | correct_answer | standard | is_correct |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 6696bef5d6f16ed5d78693c9 | e6a94901 | Gwendolyn | Harvey | Fake-class4559_Gas_20240110 | Gas | Fake-class4559 | Q1 | Multiple Choice Question | B | B | Bio.1.1.1 | True |
| 1 | 6696bef5d6f16ed5d78693c9 | e6a94901 | Gwendolyn | Harvey | Fake-class4559_Gas_20240110 | Gas | Fake-class4559 | Q10 | Multiple Choice Question | A | A | Bio.3.2.1 | True |
| 2 | 6696bef5d6f16ed5d78693c9 | e6a94901 | Gwendolyn | Harvey | Fake-class4559_Gas_20240110 | Gas | Fake-class4559 | Q11 | Multiple Choice Question | A | D | Bio.3.2.2 | False |
| 3 | 6696bef5d6f16ed5d78693c9 | e6a94901 | Gwendolyn | Harvey | Fake-class4559_Gas_20240110 | Gas | Fake-class4559 | Q12 | Multiple Choice Question | C | B | Bio.3.3.2 | False |
| 4 | 6696bef5d6f16ed5d78693c9 | e6a94901 | Gwendolyn | Harvey | Fake-class4559_Gas_20240110 | Gas | Fake-class4559 | Q13 | Multiple Choice Question | A | A | Bio.3.3.3 | True |
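`anonymize_df` derives student IDs from an unsalted MD5 hash of the real ID, truncated to 8 hex characters. If real IDs come from a small, guessable space (short numeric IDs, for instance, which is an assumption here), anyone can hash candidate IDs and match them against the pseudonyms. A keyed hash avoids this: without the secret key, pseudonyms cannot be recomputed from guessed IDs. The sketch below swaps MD5 for HMAC-SHA256 from the standard library; `pseudonymize_id` is a hypothetical name.

```python
import hashlib
import hmac


def pseudonymize_id(student_id, secret_key, length=8):
    """Keyed pseudonym for a student ID using HMAC-SHA256.

    secret_key must be bytes and kept out of the notebook; the same key
    always yields the same pseudonym, so mappings stay consistent.
    """
    digest = hmac.new(secret_key, str(student_id).encode(), hashlib.sha256).hexdigest()
    return digest[:length]  # truncated hex digest, like the 8-character MD5 IDs above
```

The key could be loaded with `load_dotenv()` like `TEACHER_2`, e.g. from a hypothetical `ANON_KEY` variable: `key = os.getenv('ANON_KEY').encode()`, then `student_id_map = {sid: pseudonymize_id(sid, key) for sid in df['student_id'].unique()}`. With 8 hex characters (32 bits), collisions are unlikely for a few hundred students but not impossible, so checking `len(set(student_id_map.values()))` against the number of students is cheap insurance.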

```python
# Per-student, per-standard performance (note: anonymized_df2 is used instead of df)
performance_by_standard = anonymized_df2.groupby(['student_id', 'first_name', 'last_name', 'standard', 'class_name']).agg({
    'is_correct': ['mean', 'count']
}).reset_index()

# Flatten the two-level columns produced by the list of aggregations
performance_by_standard.columns = ['student_id', 'first_name', 'last_name', 'standard', 'class_name', 'avg_performance', 'total_questions']
performance_by_standard['avg_performance'] *= 100  # fraction correct -> percentage
performance_by_standard['avg_performance'] = performance_by_standard['avg_performance'].round(2)

display(performance_by_standard.head(5))
```

| | student_id | first_name | last_name | standard | class_name | avg_performance | total_questions |
|---|---|---|---|---|---|---|---|
| 0 | 00572bac | Laura | Spencer | Chm.1.1.1 | Fake-class4054 | 100.0 | 12 |
| 1 | 00572bac | Laura | Spencer | Chm.1.1.3 | Fake-class4054 | 0.0 | 2 |
| 2 | 00572bac | Laura | Spencer | Chm.1.1.4 | Fake-class4054 | 100.0 | 2 |
| 3 | 00572bac | Laura | Spencer | Chm.1.2.1 | Fake-class4054 | 0.0 | 2 |
| 4 | 00572bac | Laura | Spencer | Chm.1.2.4 | Fake-class4054 | 0.0 | 4 |
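The aggregation above passes a list of functions and then reassigns all column names by position, which silently mislabels columns if the grouping keys change. pandas named aggregation produces flat, named columns directly. The following sketch is an alternative formulation, not the notebook's code; `performance_by_group` is a hypothetical name.

```python
import pandas as pd


def performance_by_group(df, keys):
    """Percent correct and response count per group, using named aggregation."""
    out = (
        df.groupby(keys, as_index=False)  # keys stay as ordinary columns
        .agg(
            avg_performance=("is_correct", "mean"),   # fraction of responses correct
            total_questions=("is_correct", "count"),  # number of responses in the group
        )
    )
    out["avg_performance"] = (out["avg_performance"] * 100).round(2)
    return out
```

For example, `performance_by_group(anonymized_df2, ['student_id', 'first_name', 'last_name', 'standard', 'class_name'])` should give the same values as `performance_by_standard`. Note that `groupby` drops rows whose key values are NaN by default.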

Visualization of performance by standard for all students in all classes

```python
from src.utils import create_performance_dash_app

# create_performance_dash_app(performance_by_standard)
```

Overall Performance by Standard:

Question: Who are the top-performing students for each standard?

```python
# Uses anonymized data: performance_by_standard was built from anonymized_df2, not df
def get_top_n_students(perf_df, n=5, upper=True):
    """Return the top (upper=True) or bottom (upper=False) n students for each standard."""
    # Rank within each standard; kept as a separate Series so perf_df is not modified
    rank = perf_df.groupby('standard')['avg_performance'].rank(method='first', ascending=not upper)

    # Keep only the top/bottom n ranks
    result = perf_df[rank <= n]

    # Sort by standard, then score, then number of questions answered (most first)
    result = result.sort_values(['standard', 'avg_performance', 'total_questions'],
                                ascending=[True, not upper, False])

    return result.reset_index(drop=True)


# Get the top 5 students for each standard
top_5_students = get_top_n_students(performance_by_standard, n=5, upper=True)

print("Top 5 Performing Students for Each Standard (first four shown):")
display(top_5_students.head(20))
```

Top 5 Performing Students for Each Standard (first four shown):

| | student_id | first_name | last_name | standard | class_name | avg_performance | total_questions |
|---|---|---|---|---|---|---|---|
| 0 | 53beba4a | Michelle | White | Bio.1.1.1 | Fake-class2111 | 100.0 | 39 |
| 1 | 94a5fc51 | Alexa | Johnson | Bio.1.1.1 | Fake-class2111 | 100.0 | 39 |
| 2 | 1bc64804 | Brian | Carr | Bio.1.1.1 | Fake-class3907 | 100.0 | 3 |
| 3 | 1f1632cf | Anne | Roberts | Bio.1.1.1 | Fake-class3907 | 100.0 | 3 |
| 4 | b36f948a | Gregory | Elliott | Bio.1.1.1 | Fake-class3907 | 100.0 | 3 |
| 5 | 356940e2 | Dawn | Patel | Bio.1.1.2 | Fake-class2111 | 100.0 | 38 |
| 6 | 1f2dd468 | Roberta | Smith | Bio.1.1.2 | Fake-class8861 | 100.0 | 14 |
| 7 | 17c27f6b | Diane | Hall | Bio.1.1.2 | Fake-class9903 | 100.0 | 6 |
| 8 | 40765158 | Caroline | Jensen | Bio.1.1.2 | Fake-class9903 | 100.0 | 6 |
| 9 | 17403e5a | Julie | Diaz | Bio.1.1.2 | Fake-class9903 | 100.0 | 3 |
| 10 | 0b1640fc | Sarah | King | Bio.1.1.3 | Fake-class1148 | 100.0 | 8 |
| 11 | 1848863e | Johnny | Walton | Bio.1.1.3 | Fake-class7747 | 100.0 | 2 |
| 12 | 0b4a7ae3 | Derek | Little | Bio.1.1.3 | Fake-class5191 | 100.0 | 1 |
| 13 | 11c7c31e | Natalie | Morales | Bio.1.1.3 | Fake-class5191 | 100.0 | 1 |
| 14 | 17403e5a | Julie | Diaz | Bio.1.1.3 | Fake-class5191 | 100.0 | 1 |
| 15 | 17c27f6b | Diane | Hall | Bio.1.2.1 | Fake-class9903 | 100.0 | 22 |
| 16 | 26bd8d3f | Catherine | Peterson | Bio.1.2.1 | Fake-class9903 | 100.0 | 22 |
| 17 | 37054f91 | Michelle | Thomas | Bio.1.2.1 | Fake-class9903 | 100.0 | 22 |
| 18 | 3ae9501e | Charles | Park | Bio.1.2.1 | Fake-class9903 | 100.0 | 22 |
| 19 | 1f2dd468 | Roberta | Smith | Bio.1.2.1 | Fake-class8861 | 100.0 | 13 |
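Because many students score 100% on a standard, `get_top_n_students` ranks with `method='first'`, which breaks ties by row order before the final sort. A student who answered 3 questions can therefore displace one who answered 39. A small alternative, sketched here with a hypothetical name, sorts first (score, then number of questions answered) and keeps the first `n` rows of each standard with `groupby(...).head(n)`.

```python
import pandas as pd


def top_n_per_standard(perf_df, n=5, upper=True):
    """Top (upper=True) or bottom (upper=False) n students per standard.

    Score ties are broken in favor of students who answered more questions,
    i.e. whose percentage rests on more evidence.
    """
    ordered = perf_df.sort_values(
        ["standard", "avg_performance", "total_questions"],
        ascending=[True, not upper, False],  # more questions first in both directions
    )
    # head(n) keeps the first n rows of each standard in the sorted order
    return ordered.groupby("standard").head(n).reset_index(drop=True)
```

The same call works for the bottom performers with `upper=False`: a 0% over 30 questions then ranks ahead of a 0% over a single question.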

Overall Performance by Standard:

Question: Who are the lowest-performing students for each standard?

```python
# Get the bottom 5 students for each standard
bottom_5_students = get_top_n_students(performance_by_standard, n=5, upper=False)

print("Bottom 5 Performing Students for Each Standard (first four shown):")
display(bottom_5_students.head(20))
```

Bottom 5 Performing Students for Each Standard (first four shown):

| | student_id | first_name | last_name | standard | class_name | avg_performance | total_questions |
|---|---|---|---|---|---|---|---|
| 0 | 102354b4 | Charles | Brown | Bio.1.1.1 | Fake-class5191 | 0.0 | 30 |
| 1 | 11b2225e | Julia | Morris | Bio.1.1.1 | Fake-class5191 | 0.0 | 30 |
| 2 | 1440a1b6 | Jake | Sutton | Bio.1.1.1 | Fake-class5191 | 0.0 | 30 |
| 3 | 1227c936 | Christina | Galvan | Bio.1.1.1 | Fake-class3549 | 0.0 | 20 |
| 4 | 01d86cf1 | Michael | Perez | Bio.1.1.1 | Fake-class3907 | 0.0 | 3 |
| 5 | 102354b4 | Charles | Brown | Bio.1.1.2 | Fake-class5191 | 0.0 | 25 |
| 6 | 0b4a7ae3 | Derek | Little | Bio.1.1.2 | Fake-class9903 | 0.0 | 3 |
| 7 | 0b9f19df | Cassandra | Brown | Bio.1.1.2 | Fake-class9903 | 0.0 | 3 |
| 8 | 0d5c1de7 | Michelle | Clark | Bio.1.1.2 | Fake-class9903 | 0.0 | 3 |
| 9 | 102354b4 | Charles | Brown | Bio.1.1.2 | Fake-class9903 | 0.0 | 3 |
| 10 | 01d86cf1 | Michael | Perez | Bio.1.1.3 | Fake-class3907 | 0.0 | 4 |
| 11 | 030f3b3b | Kenneth | Rodriguez | Bio.1.1.3 | Fake-class3907 | 0.0 | 4 |
| 12 | 013c5340 | Garrett | Perez | Bio.1.1.3 | Fake-class7747 | 0.0 | 2 |
| 13 | 0584887e | Kristina | Taylor | Bio.1.1.3 | Fake-class5191 | 0.0 | 1 |
| 14 | 078a6cba | Danielle | Sherman | Bio.1.1.3 | Fake-class5191 | 0.0 | 1 |
| 15 | 05650d90 | Wayne | Perry | Bio.1.2.1 | Fake-class2111 | 0.0 | 26 |
| 16 | 0b4a7ae3 | Derek | Little | Bio.1.2.1 | Fake-class9903 | 0.0 | 11 |
| 17 | 0b9f19df | Cassandra | Brown | Bio.1.2.1 | Fake-class9903 | 0.0 | 11 |
| 18 | 102354b4 | Charles | Brown | Bio.1.2.1 | Fake-class9903 | 0.0 | 11 |
| 19 | 11b2225e | Julia | Morris | Bio.1.2.1 | Fake-class9903 | 0.0 | 11 |

Overall Performance by Standard:

Question: Which standards are the most difficult, as measured by average performance?

Standards ranked by difficulty

```python
# Average performance per standard (using anonymized_df2 instead of df)
standard_difficulty = anonymized_df2.groupby('standard').agg({
    'is_correct': ['mean', 'count'],
    'student_id': 'nunique'
}).reset_index()

# Flatten column names ('count' counts student responses, not distinct questions)
standard_difficulty.columns = ['standard', 'avg_performance', 'total_questions', 'unique_students']

# Convert average performance to a percentage and round
standard_difficulty['avg_performance'] = (standard_difficulty['avg_performance'] * 100).round(2)

# Sort ascending so the most difficult standards come first
standard_difficulty = standard_difficulty.sort_values('avg_performance', ascending=True)

# Dense rank: standards with equal averages share a rank
standard_difficulty['difficulty_rank'] = standard_difficulty['avg_performance'].rank(method='dense', ascending=True)

# Reset the index; reset_index returns a new DataFrame, so assign it back
standard_difficulty = standard_difficulty.reset_index(drop=True)

print("Standards Ranked by Difficulty (Most Difficult First):")
display(standard_difficulty)
```

Standards Ranked by Difficulty (Most Difficult First):

| | standard | avg_performance | total_questions | unique_students | difficulty_rank |
|---|---|---|---|---|---|
| 36 | Chm.1.2.2 | 16.67 | 192 | 74 | 1.0 |
| 92 | PSc.3.1.1 | 17.80 | 236 | 59 | 2.0 |
| 70 | EEn.2.4.1 | 21.59 | 602 | 43 | 3.0 |
| 85 | PSc.2.2.2 | 22.17 | 2436 | 59 | 4.0 |
| 55 | Chm.3.1.3 | 22.40 | 384 | 74 | 5.0 |
| ... | ... | ... | ... | ... | ... |
| 66 | EEn.2.1.1 | 67.26 | 2236 | 43 | 98.0 |
| 67 | EEn.2.1.2 | 67.44 | 86 | 43 | 99.0 |
| 57 | Chm.3.2.2 | 68.18 | 44 | 22 | 100.0 |
| 62 | EEn.1.1.1 | 74.42 | 602 | 43 | 101.0 |
| 58 | Chm.3.2.3 | 75.42 | 472 | 107 | 102.0 |

104 rows × 5 columns

Overall Performance by Standard:

Question: Which standards are students most proficient in?

```python
# Highest-scoring standards first
display(standard_difficulty.sort_values(by='difficulty_rank', ascending=False))
```

| | standard | avg_performance | total_questions | unique_students | difficulty_rank |
|---|---|---|---|---|---|
| 58 | Chm.3.2.3 | 75.42 | 472 | 107 | 102.0 |
| 62 | EEn.1.1.1 | 74.42 | 602 | 43 | 101.0 |
| 57 | Chm.3.2.2 | 68.18 | 44 | 22 | 100.0 |
| 67 | EEn.2.1.2 | 67.44 | 86 | 43 | 99.0 |
| 66 | EEn.2.1.1 | 67.26 | 2236 | 43 | 98.0 |
| ... | ... | ... | ... | ... | ... |
| 55 | Chm.3.1.3 | 22.40 | 384 | 74 | 5.0 |
| 85 | PSc.2.2.2 | 22.17 | 2436 | 59 | 4.0 |
| 70 | EEn.2.4.1 | 21.59 | 602 | 43 | 3.0 |
| 92 | PSc.3.1.1 | 17.80 | 236 | 59 | 2.0 |
| 36 | Chm.1.2.2 | 16.67 | 192 | 74 | 1.0 |

104 rows × 5 columns
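The analysis plan lists a Standard Coverage Analysis that correlates how often a standard is tested with performance, and `standard_difficulty` already holds both columns. Because `total_questions` ranges from 44 to 2,436 responses and is heavily skewed, a rank-based (Spearman) correlation is a reasonable first check. The sketch below computes it as the Pearson correlation of ranks, which avoids a SciPy dependency; `coverage_vs_performance` is a hypothetical name.

```python
import pandas as pd


def coverage_vs_performance(standard_difficulty):
    """Spearman rank correlation between test coverage and average performance.

    Spearman's rho is the Pearson correlation of the ranks; pandas'
    rank() assigns tied values their average rank.
    """
    freq_rank = standard_difficulty["total_questions"].rank()
    perf_rank = standard_difficulty["avg_performance"].rank()
    return freq_rank.corr(perf_rank)  # Pearson correlation of the ranks
```

`total_questions` counts student responses rather than distinct questions, so a standard can look heavily covered simply because many students took the tests that include it. A correlation across standards also says nothing about cause.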

Standard performance overview

Question Type Difficulty

Question: What are the difficulty rankings for different question types?

What item types are available in MasteryConnect?

```python
# Calculate difficulty for each question type
question_type_difficulty = anonymized_df2.groupby('question_type').agg({
    'is_correct': ['mean', 'count'],
    'student_id': 'nunique'
}).reset_index()

# Flatten column names ('count' counts student responses, not distinct questions)
question_type_difficulty.columns = ['question_type', 'avg_performance', 'total_questions', 'unique_students']

# Convert average performance to a percentage and round
question_type_difficulty['avg_performance'] = (question_type_difficulty['avg_performance'] * 100).round(2)

# Sort ascending so the most difficult question types come first
question_type_difficulty = question_type_difficulty.sort_values('avg_performance', ascending=True)

# Add a dense difficulty rank
question_type_difficulty['difficulty_rank'] = question_type_difficulty['avg_performance'].rank(method='dense', ascending=True)

print("Question Types Ranked by Difficulty (Most Difficult First):")
display(question_type_difficulty)
```

Question Types Ranked by Difficulty (Most Difficult First):

| | question_type | avg_performance | total_questions | unique_students | difficulty_rank |
|---|---|---|---|---|---|
| 1 | Classification | 0.00 | 32 | 16 | 1.0 |
| 0 | Choice Matrix | 8.05 | 447 | 130 | 2.0 |
| 2 | Cloze Association | 12.93 | 379 | 130 | 3.0 |
| 4 | Highlight | 13.01 | 292 | 130 | 4.0 |
| 3 | Cloze Dropdown | 14.71 | 136 | 81 | 5.0 |
| 6 | Match List | 14.82 | 2855 | 130 | 6.0 |
| 7 | Multi Select | 18.71 | 930 | 233 | 7.0 |
| 5 | Label Image with Drag and Drop | 19.62 | 2156 | 210 | 8.0 |
| 11 | Order List | 32.79 | 369 | 130 | 9.0 |
| 8 | Multiple Choice | 40.15 | 177247 | 709 | 10.0 |
| 12 | Sort List | 45.12 | 246 | 81 | 11.0 |
| 10 | Multiple Choice Question | 46.74 | 60075 | 442 | 12.0 |
| 9 | Multiple Choice (Single Response) | 50.96 | 13674 | 43 | 13.0 |
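The table contains three multiple-choice labels ("Multiple Choice", "Multiple Choice Question", "Multiple Choice (Single Response)"). If they are the same item format exported under different names (an assumption that should be checked in MasteryConnect, since their averages and student counts differ and may reflect different courses or years), collapsing them before grouping gives a single difficulty figure for the format. The alias mapping and function name below are hypothetical.

```python
import pandas as pd

# Hypothetical aliases: assumes these labels describe the same
# single-answer multiple-choice format exported under different names.
ITEM_TYPE_ALIASES = {
    "Multiple Choice Question": "Multiple Choice",
    "Multiple Choice (Single Response)": "Multiple Choice",
}


def normalize_question_types(question_type):
    """Collapse label variants; values not in the mapping are left unchanged."""
    return question_type.replace(ITEM_TYPE_ALIASES)
```

It would be applied before the groupby, e.g. `anonymized_df2['question_type'] = normalize_question_types(anonymized_df2['question_type'])`.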

Question type difficulty visualization

Student Progress Over Time:

Question: How has each student's progress changed over time?

```python
# Using the anonymized DataFrame
from src.utils import display_performance_figure

# If there is no date column, derive one from the YYYYMMDD suffix of test_id.
# Caution: anonymize_df replaces that suffix with a random date, so dates parsed
# from anonymized_df2['test_id'] are not the real test dates.
if 'date' not in anonymized_df2.columns:
    anonymized_df2['date'] = pd.to_datetime(anonymized_df2['test_id'].str.split('_').str[-1], format='%Y%m%d')

# Ensure the date column has a datetime dtype
anonymized_df2['date'] = pd.to_datetime(anonymized_df2['date'])

# display_performance_figure(anonymized_df2, performance_trend_df)
```
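Because `anonymize_df` replaces the date suffix of each `test_id` with `fake.date_this_year()`, the fallback above would build the progress timeline on fake dates. `anonymize_df` works on `df.copy()` and only maps column values, so both frames share the same index, and the real dates can be parsed from the original `df` and attached by index. A minimal sketch, assuming original test IDs end in a `YYYYMMDD` segment (the same assumption the fallback makes); `attach_real_dates` is a hypothetical helper.

```python
import pandas as pd


def attach_real_dates(original, anonymized, test_id_col="test_id"):
    """Copy dates parsed from the original test IDs onto the anonymized frame.

    Assumes original IDs end in YYYYMMDD and that both frames share an index.
    """
    dates = pd.to_datetime(
        original[test_id_col].str.split("_").str[-1],
        format="%Y%m%d",
        errors="coerce",  # IDs without a valid date become NaT instead of raising
    )
    result = anonymized.copy()
    result["date"] = dates  # assignment aligns on the shared index
    return result
```

Used as `anonymized_df2 = attach_real_dates(df, anonymized_df2)` in place of the fallback, the dates stay real while names and IDs stay fake. Rows with `NaT` dates point to test IDs that do not follow the assumed format.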